Updated at: 2022-12-09 03:49:50

Search conditions include full-text retrieval, phrase retrieval, field value retrieval, logical operator retrieval, and SPL command retrieval.

► Full-text Retrieval

Full-text retrieval parses spaces automatically: keywords separated by spaces are combined with AND logic, so several keywords can be searched together. Search results are matched precisely against the keywords, and the matched keywords are highlighted.

Keyword retrieval is case-insensitive, and when you query by multiple keywords, their order does not matter.

Note: If a search keyword itself contains spaces, escape the spaces with the escape character "\" or enclose the keyword in double quotation marks. Search results are sorted by time by default.

Common examples of full-text retrieval scenarios are as follows:

Note: Stop words are words that are automatically filtered out before or after natural language data (text) is processed, in order to save storage space and improve search efficiency. If a stop word has a special meaning in the log information, the system still searches for it. In English, stop words are usually articles, prepositions, adverbs, or conjunctions. Common stop words are: a, an, and, are, as, at, be, but, by, for, if, in, into, is, it, no, not, of, on, or, such, that, the, their, then, there, these, they, this, to, was, will, with.

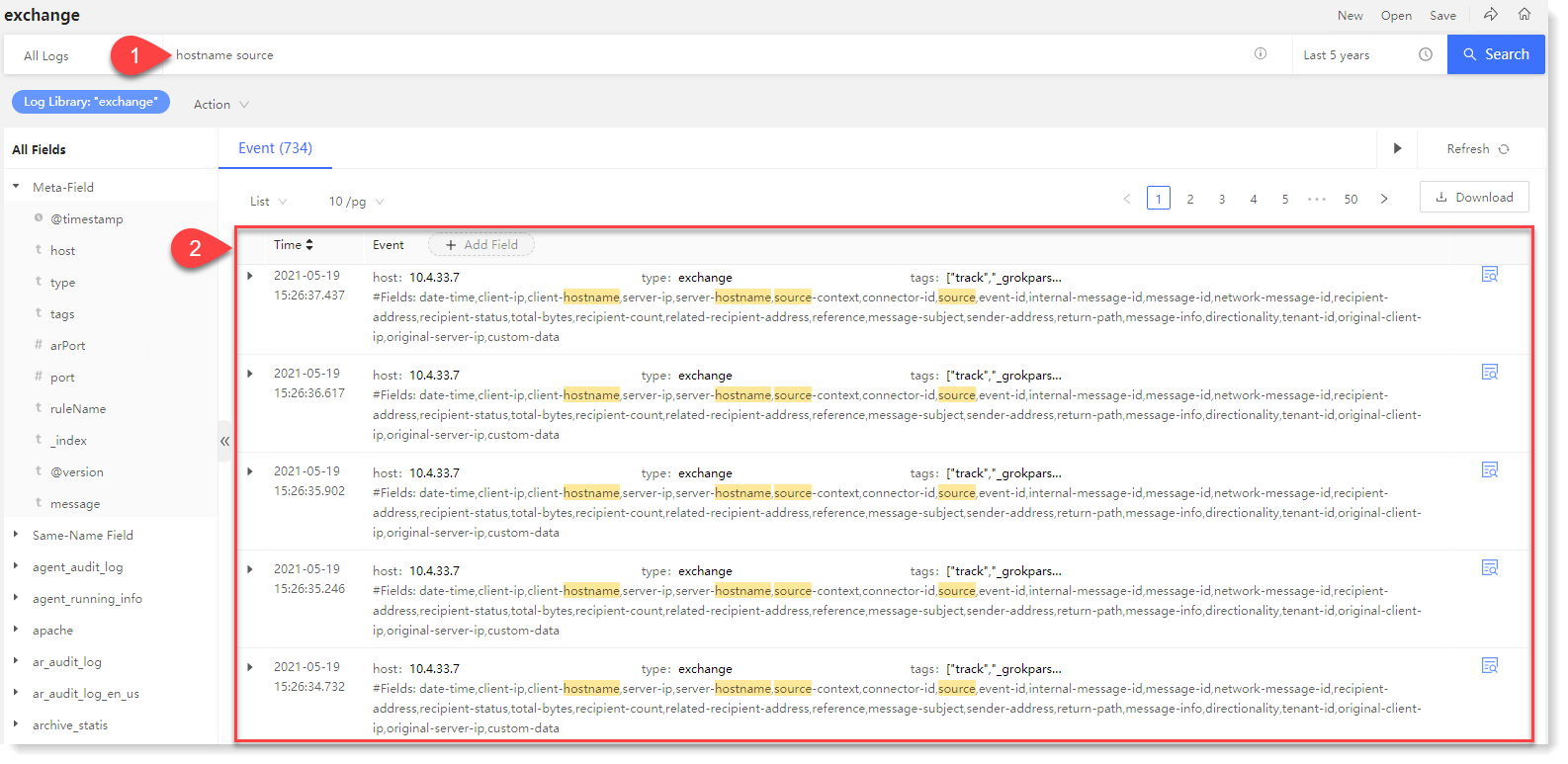

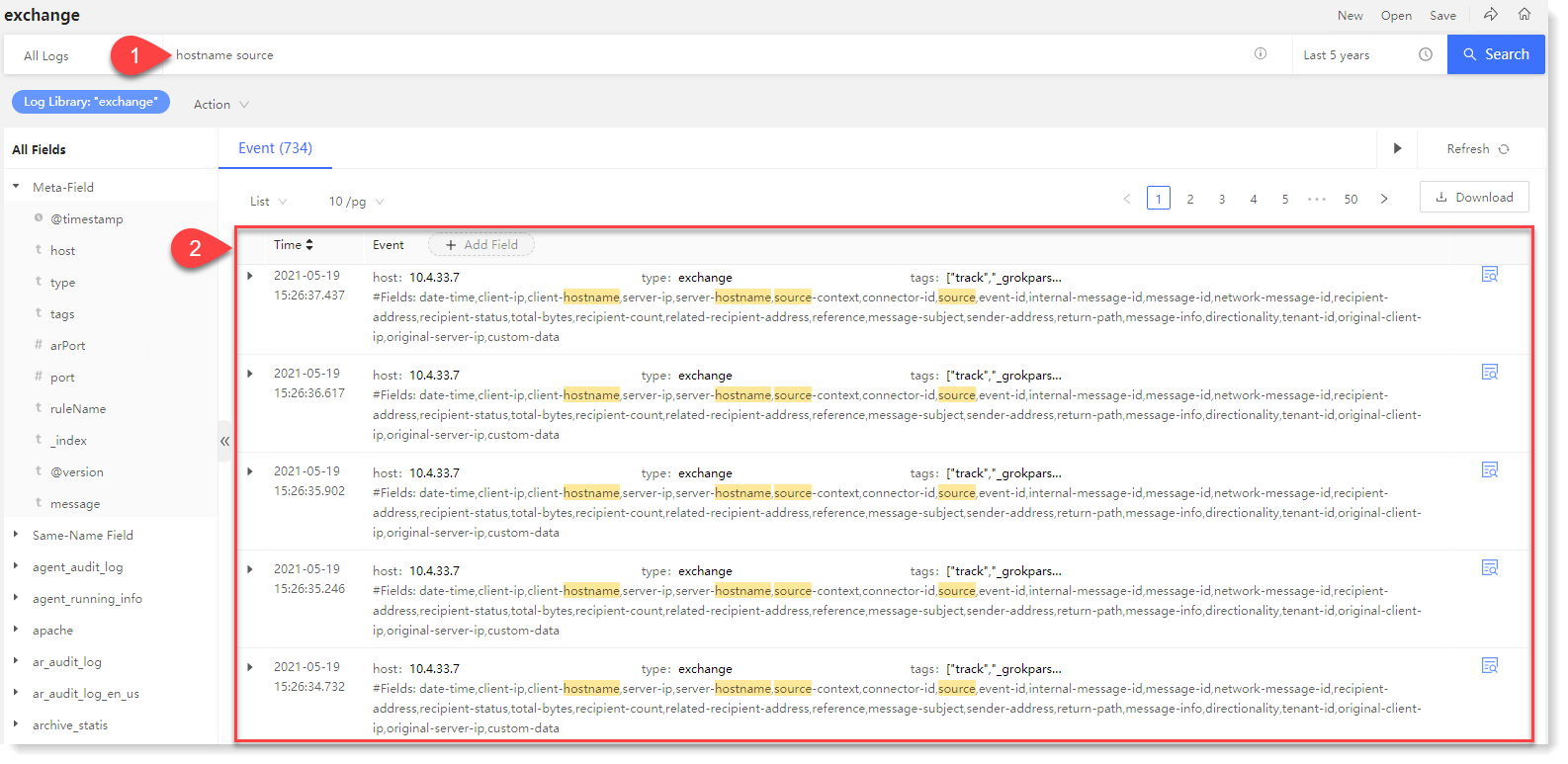

• Example: Searching for the keywords hostname source returns results that contain both hostname and source, with the matched words highlighted.
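To make the matching rules above concrete, here is a minimal Python sketch of space-separated AND matching. It is an illustration under stated assumptions, not AnyRobot's implementation: the word tokenizer, the helper names `fulltext_match`, `keywords`, and `highlight`, and the `[[...]]` highlight markers are all hypothetical, and for simplicity the sketch always drops the stop words listed above, ignoring the special-meaning exception.

```python
import re

# Stop words from the note above. This sketch always drops them from the query
# (assumption: the "special meaning" exception is not modeled).
STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if",
    "in", "into", "is", "it", "no", "not", "of", "on", "or", "such",
    "that", "the", "their", "then", "there", "these", "they", "this",
    "to", "was", "will", "with",
}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens (assumed tokenizer)."""
    return re.findall(r"\w+", text.lower())


def keywords(query: str) -> set[str]:
    """Space-separated query keywords, lowercased, with stop words removed."""
    return {word for word in tokenize(query) if word not in STOP_WORDS}


def fulltext_match(query: str, log: str) -> bool:
    """AND logic: every keyword must occur in the log; order and case are ignored."""
    return keywords(query) <= set(tokenize(log))


def highlight(log: str, query: str) -> str:
    """Wrap matched keywords in [[...]] as a stand-in for UI highlighting."""
    wanted = keywords(query)
    return re.sub(
        r"\w+",
        lambda m: f"[[{m.group(0)}]]" if m.group(0).lower() in wanted else m.group(0),
        log,
    )


if __name__ == "__main__":
    log = "Hostname=web01 source=/var/log/nginx/access.log"
    print(fulltext_match("hostname source", log))  # True
    print(fulltext_match("SOURCE hostname", log))  # True: case and order ignored
    print(fulltext_match("hostname agent", log))   # False: "agent" is missing
    print(highlight(log, "hostname source"))       # [[Hostname]]=web01 [[source]]=/var/log/nginx/access.log
```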

► Phrase Retrieval

For phrase retrieval, enclose the phrase in double quotes. Phrase matching is case-insensitive.

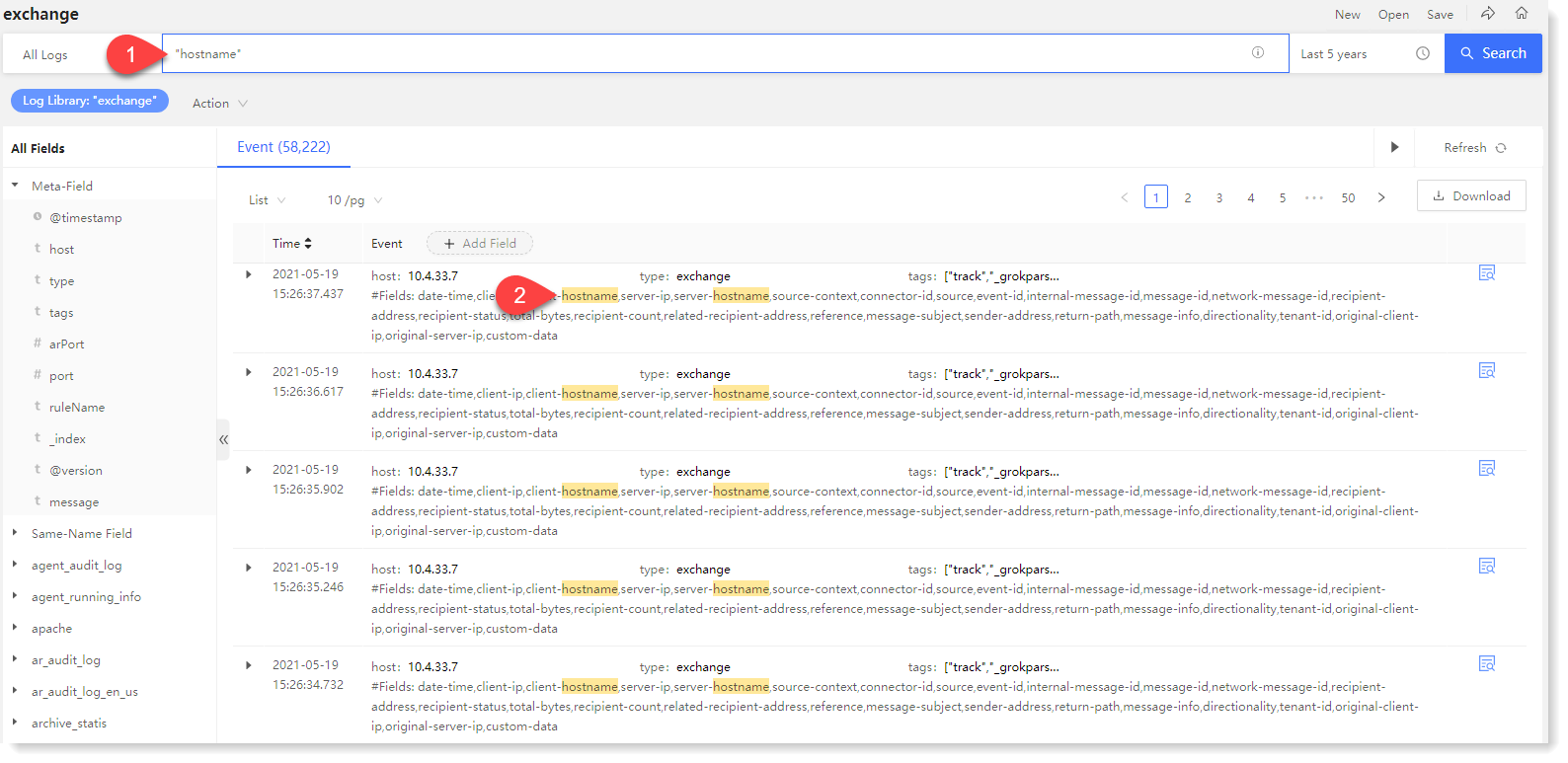

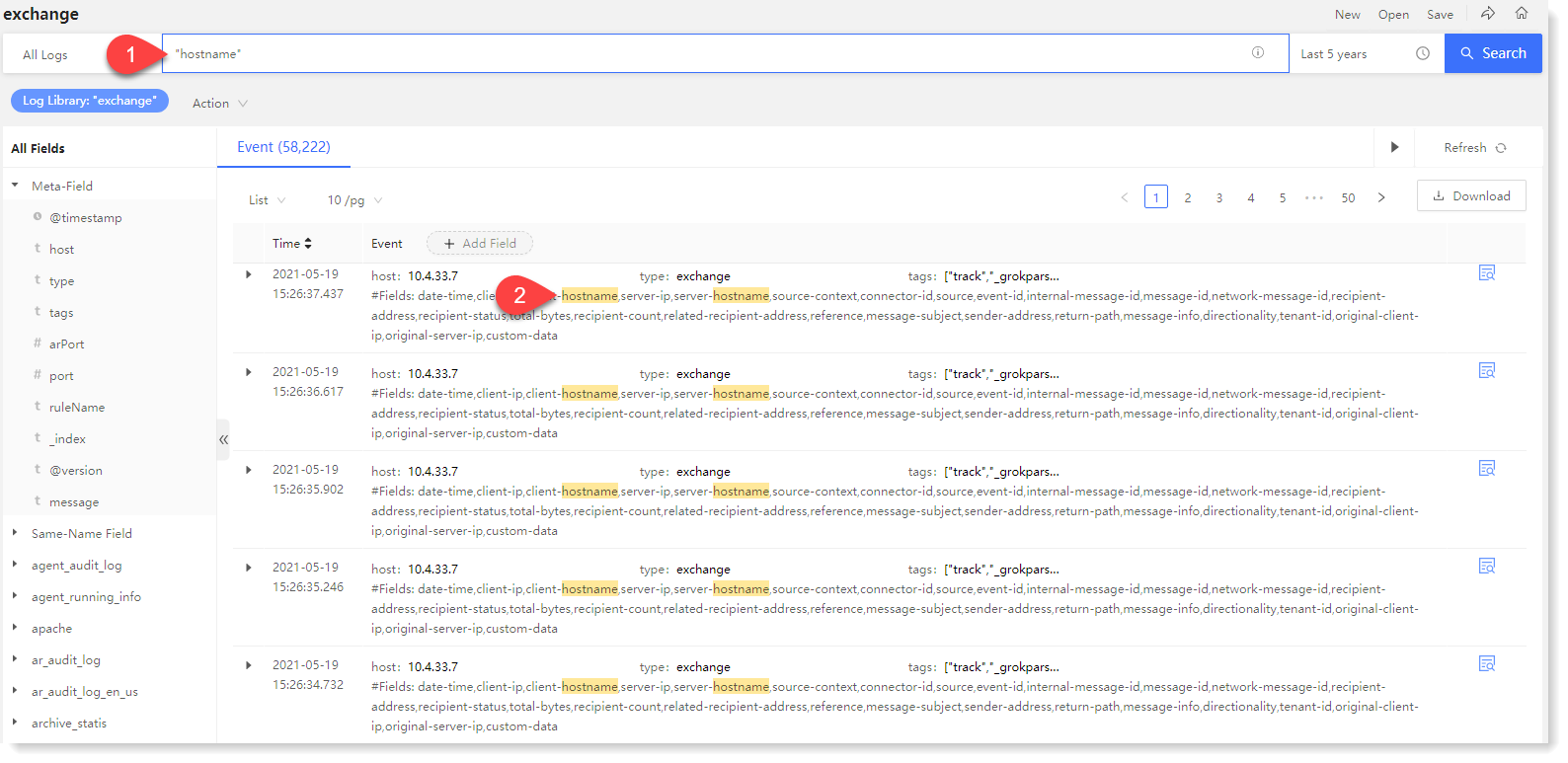

• Example: Querying "hostname" returns only logs that contain the phrase "hostname".
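The difference between phrase retrieval and full-text retrieval can be sketched in Python as follows. This assumes that a phrase matches when its words appear consecutively and in order, compared case-insensitively as word tokens; `phrase_match` and `words` are hypothetical names, not a product API.

```python
import re


def words(text: str) -> list[str]:
    """Lowercase word tokens, in order (assumed tokenizer)."""
    return re.findall(r"\w+", text.lower())


def phrase_match(phrase: str, log: str) -> bool:
    """True if the phrase's words occur consecutively, in order, in the log."""
    target = words(phrase)
    tokens = words(log)
    n = len(target)
    if n == 0:
        return False
    # Slide a window of n tokens across the log and compare it with the phrase.
    return any(tokens[i:i + n] == target for i in range(len(tokens) - n + 1))


if __name__ == "__main__":
    print(phrase_match("connection refused", "ERROR: Connection refused by host"))  # True
    # Both words are present but not as a phrase, so this does not match,
    # although a full-text search for connection refused would.
    print(phrase_match("connection refused", "refused a new connection"))  # False
```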

► Field Value Retrieval

To filter data by a specific field value, use field value retrieval.

The format is field: value, where:

• field is the field name. It is a case-sensitive string and must not contain wildcard characters such as ? or *.

• value is a string or a numeric value. String values are case-sensitive and may contain the * wildcard.

• Example: type: exchange filters the data whose log type is exchange.
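A minimal Python sketch of the field: value rules, assuming each log is represented as a dict of field names to values. `field_value_match` is a hypothetical helper; comparing numeric values by their text form and stripping surrounding double quotes from the value are simplifying assumptions, not documented product behavior.

```python
import re
from typing import Any


def field_value_match(expr: str, record: dict[str, Any]) -> bool:
    """Evaluate a 'field: value' condition against one log record (a dict)."""
    field, sep, value = expr.partition(":")
    field, value = field.strip(), value.strip()
    if not sep or not field:
        raise ValueError(f"expected 'field: value', got {expr!r}")
    # Field names are exact, case-sensitive strings: no wildcards allowed.
    if "?" in field or "*" in field:
        raise ValueError("wildcards are not allowed in the field name")
    # Assumption: a quoted value such as "as access log" is matched without the quotes.
    if len(value) >= 2 and value[0] == value[-1] == '"':
        value = value[1:-1]
    if field not in record:
        return False
    # Case-sensitive value match; each * matches any run of characters.
    pattern = ".*".join(re.escape(part) for part in value.split("*"))
    return re.fullmatch(pattern, str(record[field]), flags=re.DOTALL) is not None


if __name__ == "__main__":
    record = {"type": "exchange", "host": "mail01", "status": 200}
    print(field_value_match("type: exchange", record))  # True
    print(field_value_match("type: Exchange", record))  # False: values are case-sensitive
    print(field_value_match("host: mail*", record))     # True: * wildcard in the value
    print(field_value_match("status: 200", record))     # True: numeric value compared as text
```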

► Logical Operator Retrieval

Logical operators include AND, OR, NOT, and parentheses (). AND, OR, and NOT must be uppercase. Combining keywords with logical operators can improve search efficiency.

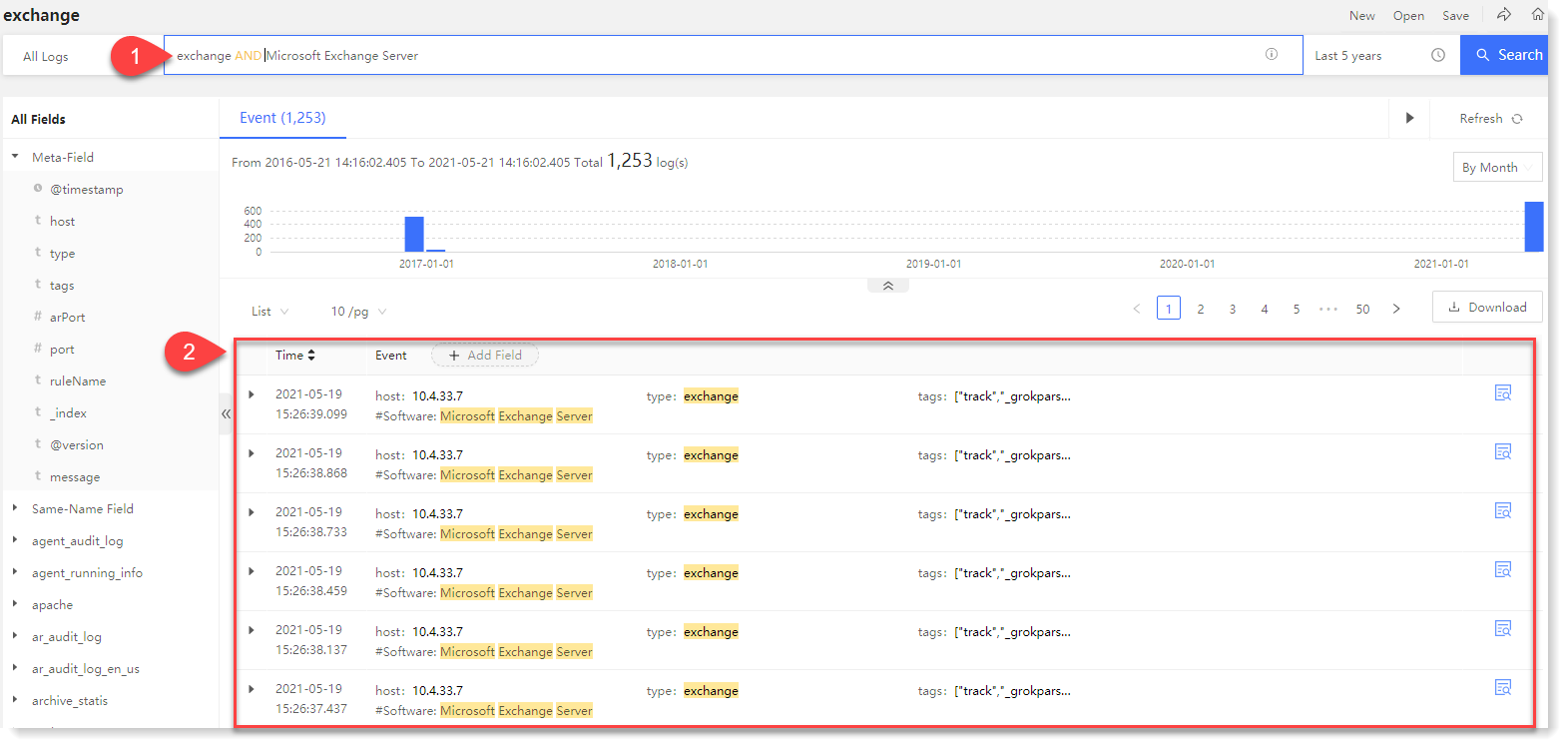

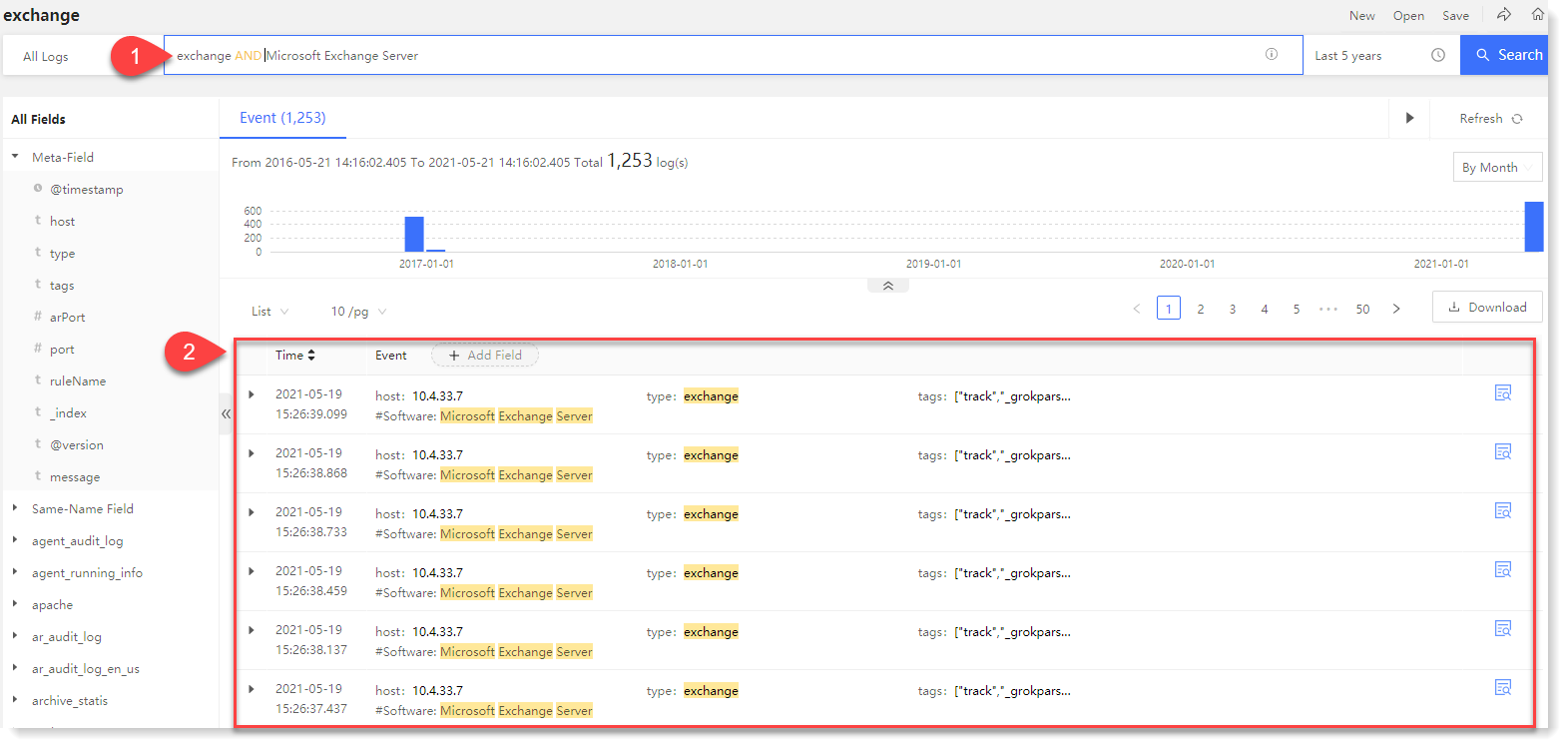

• Example 1: exchange AND Microsoft Exchange Server: A log matches only if it contains both exchange and Microsoft Exchange Server. AND is the default operator and can be omitted.

• Example 2: Test OR Agent: A log matches if it contains Test, Agent, or both.

• Example 3: NOT Test: Only logs that do not contain Test match.

• Example 4: Test AND (Agent OR aix): A log matches if it contains Test and at least one of Agent and aix. Parentheses group sub-expressions and change the order in which the Boolean operators are evaluated.
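The operator semantics can be illustrated with a small recursive-descent evaluator in Python. It is a sketch, not AnyRobot's parser: the precedence order (NOT, then AND, then OR), the implicit AND between adjacent keywords, and whole-word, case-insensitive keyword matching are assumptions, and `evaluate` is a hypothetical name. Lowercase and, or, and not are treated as ordinary keywords, matching the rule that operators must be uppercase. Under the assumed precedence, Test OR Agent AND aix is read as Test OR (Agent AND aix), so use parentheses whenever you need a different grouping.

```python
import re

# Tokens: a parenthesis, or a run of characters that are neither spaces nor parentheses.
TOKEN_RE = re.compile(r"\(|\)|[^\s()]+")


def evaluate(query: str, log: str) -> bool:
    """Evaluate keywords combined with AND, OR, NOT and () against one log line."""
    tokens = TOKEN_RE.findall(query)
    words = set(re.findall(r"\w+", log.lower()))
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        return tok

    def parse_or() -> bool:
        result = parse_and()
        while peek() == "OR":
            take()
            rhs = parse_and()  # always parse, so tokens are consumed
            result = result or rhs
        return result

    def parse_and() -> bool:
        result = parse_not()
        # Explicit AND, or an implicit AND before the next operand.
        while peek() == "AND" or (peek() is not None and peek() not in ("OR", ")")):
            if peek() == "AND":
                take()
            rhs = parse_not()
            result = result and rhs
        return result

    def parse_not() -> bool:
        if peek() == "NOT":
            take()
            return not parse_not()
        return parse_atom()

    def parse_atom() -> bool:
        tok = peek()
        if tok is None:
            raise ValueError("unexpected end of query")
        take()
        if tok == "(":
            value = parse_or()
            if peek() != ")":
                raise ValueError("missing closing parenthesis")
            take()
            return value
        if tok in ("AND", "OR", ")"):
            raise ValueError(f"unexpected token {tok!r}")
        # A keyword matches if it appears as a whole word, ignoring case.
        return tok.lower() in words

    result = parse_or()
    if pos != len(tokens):
        raise ValueError(f"unexpected token {peek()!r}")
    return result


if __name__ == "__main__":
    print(evaluate("Test AND (Agent OR aix)", "Test agent started on aix host"))  # True
    print(evaluate("Test OR Agent", "agent only"))  # True
    print(evaluate("NOT Test", "agent only"))       # True
    print(evaluate("exchange Microsoft Exchange Server",
                   "Microsoft Exchange Server exchange log"))  # True: implicit AND
```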

► SPL Command

SPL commands include rename, parse, join, top, and stats. Commands can be combined, so log data fields can be renamed, dynamically extracted, and analyzed for correlation.

♦ Rename command

The rename command is mainly used when reprocessing data that has already been aggregated: it lets you rename fields as needed.

Note: A rename takes effect only while the rename command is running; it is no longer in effect after the command finishes.

Format: * | rename field as new-name

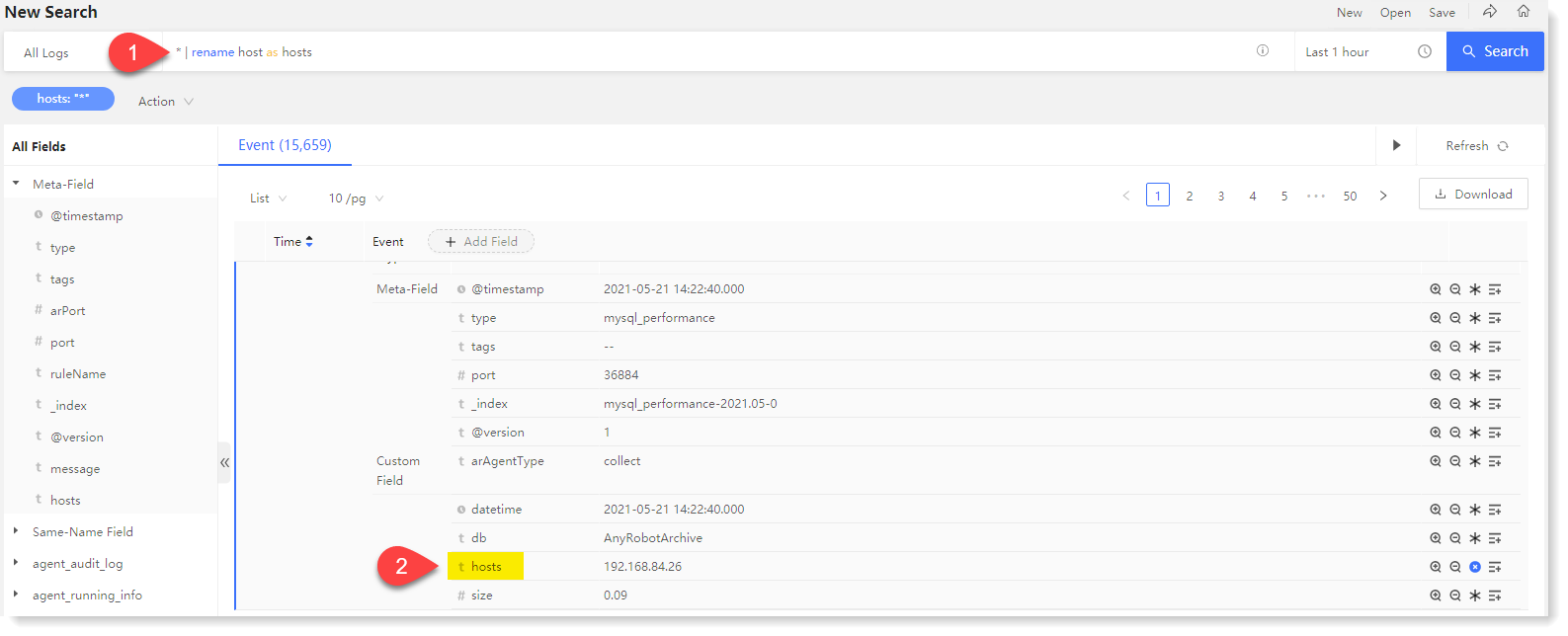

• Usage 1: Rename one field

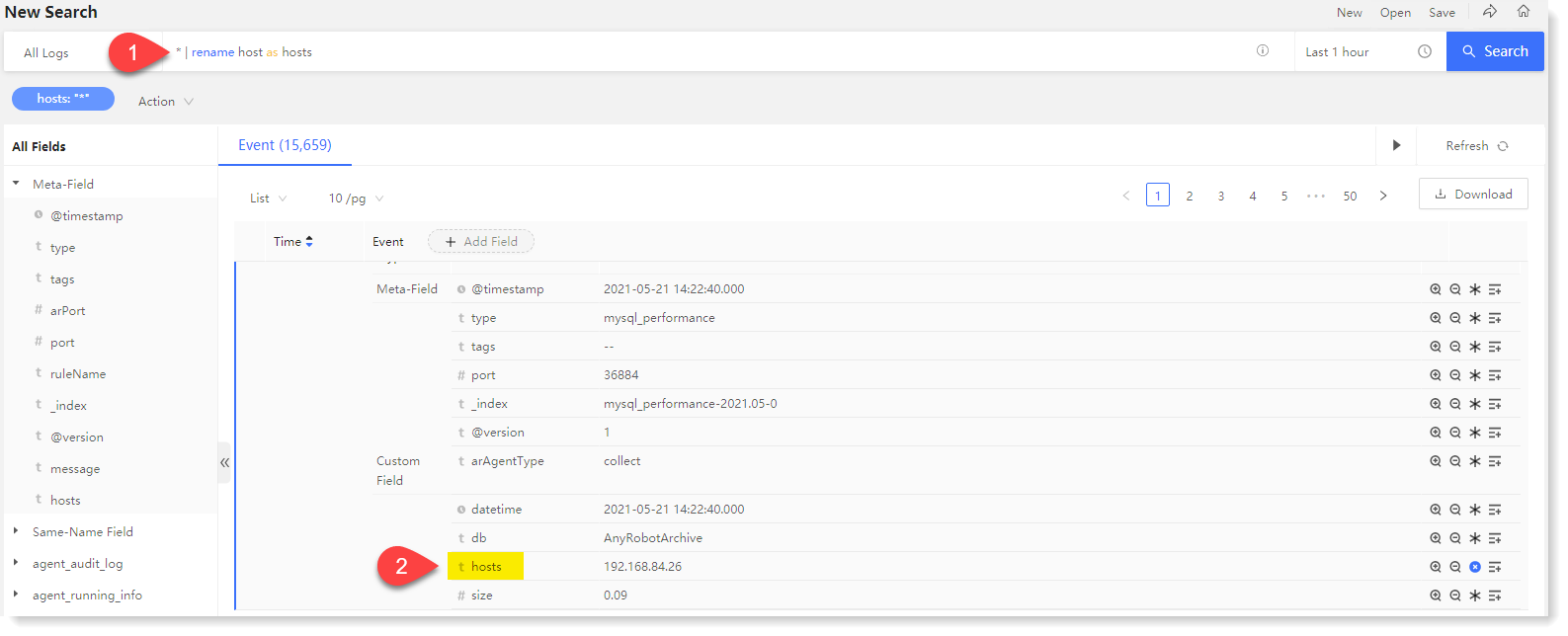

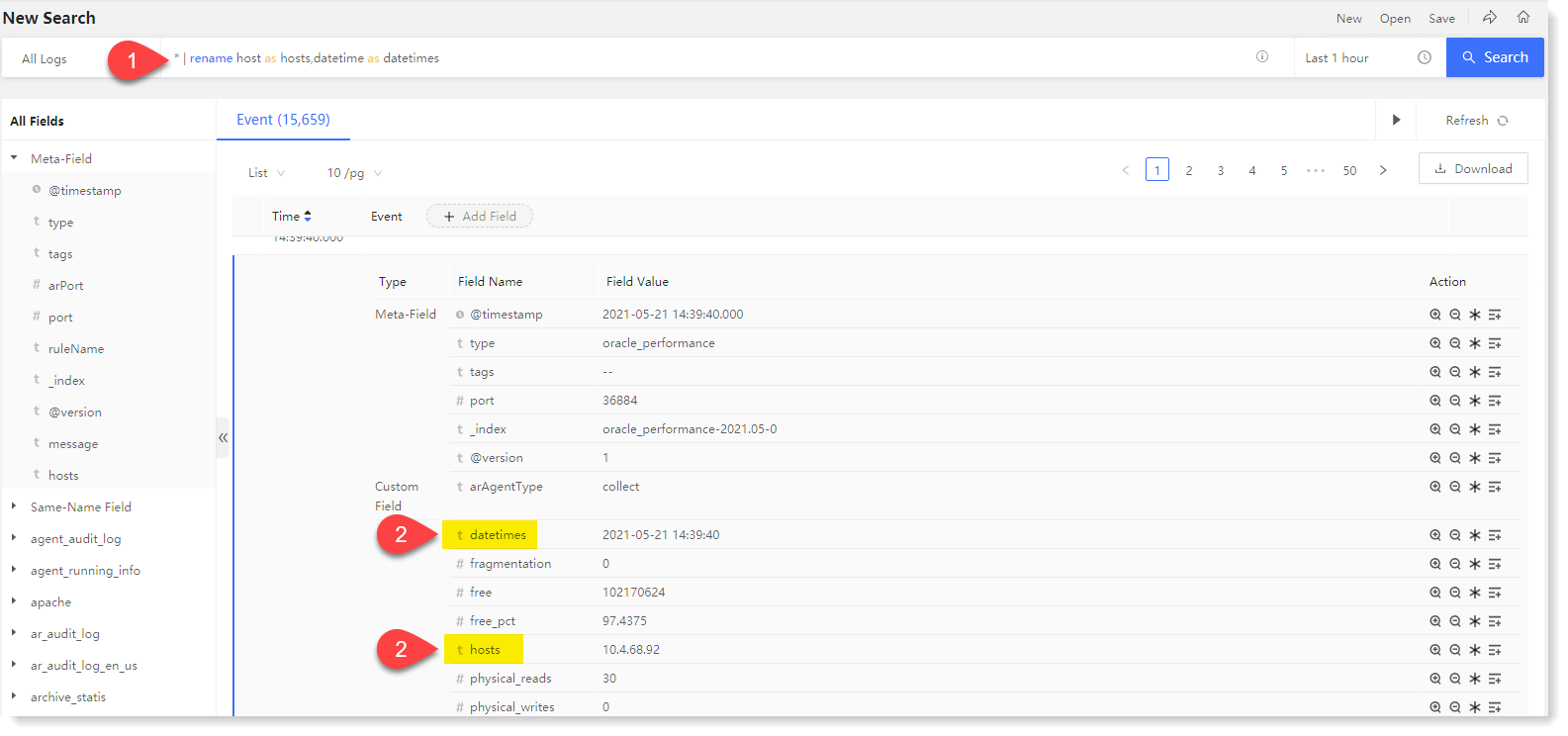

Example 1: Enter * | rename host as hosts to rename the field "host" to "hosts", as follows:

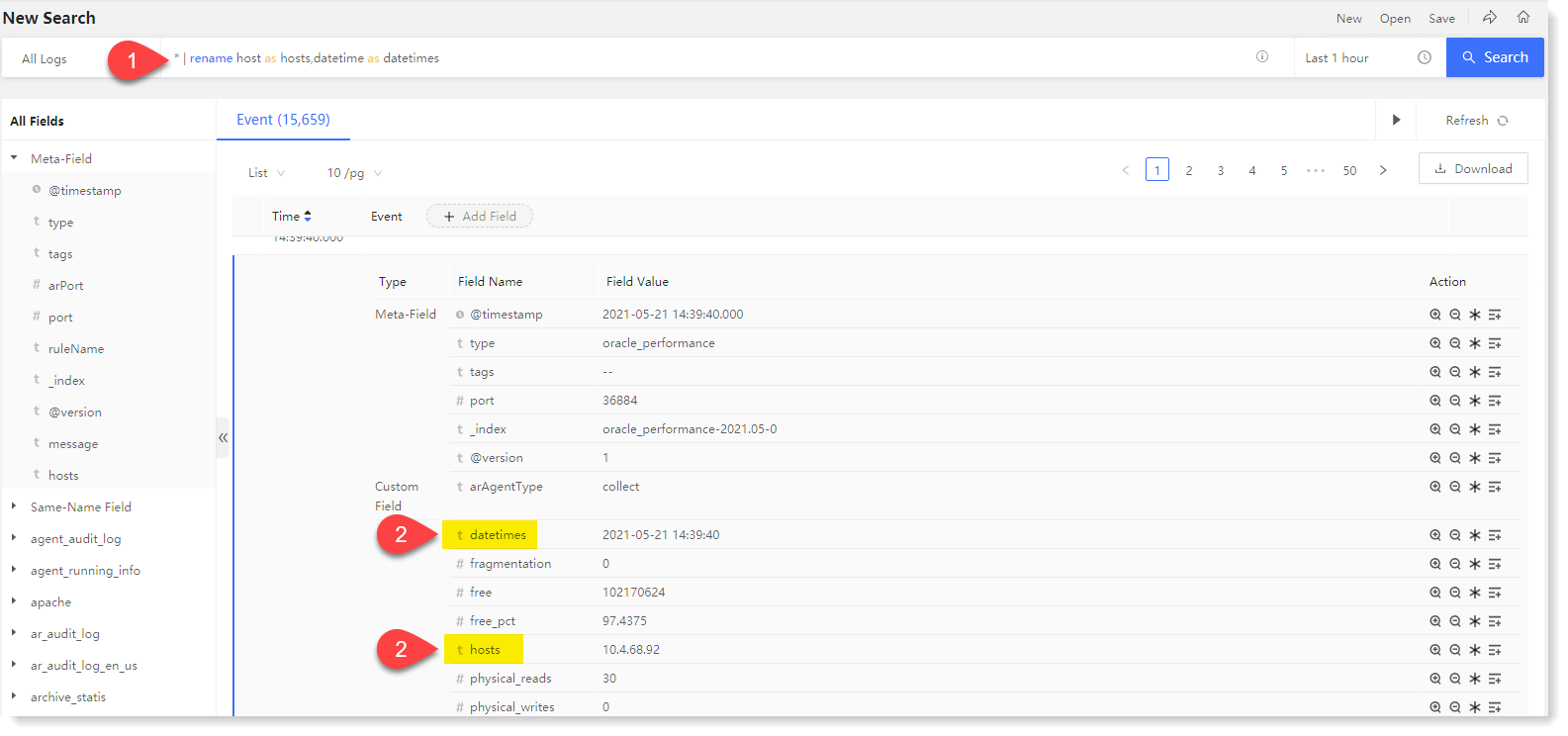

• Usage 2: Rename multiple fields

Example 2: Enter * | rename host as hosts, datatime as datatimes to rename the field "host" to "hosts" and the field "datatime" to "datatimes".
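As a rough model of what rename does to a result set, the Python sketch below parses a rename clause and returns renamed copies of the records, leaving the originals untouched, which mirrors the note that a rename does not outlive the command. The helper names `parse_rename_clause` and `rename` and the list-of-dicts representation are assumptions for illustration.

```python
def parse_rename_clause(clause: str) -> dict[str, str]:
    """Turn 'host as hosts, datatime as datatimes' into {'host': 'hosts', ...}."""
    mapping: dict[str, str] = {}
    for part in clause.split(","):
        old, sep, new = part.strip().partition(" as ")
        if not sep or not old.strip() or not new.strip():
            raise ValueError(f"expected 'field as new-name', got {part.strip()!r}")
        mapping[old.strip()] = new.strip()
    return mapping


def rename(records: list[dict], mapping: dict[str, str]) -> list[dict]:
    """Return renamed copies of the records; the input records are not modified."""
    return [{mapping.get(key, key): value for key, value in rec.items()} for rec in records]


if __name__ == "__main__":
    logs = [{"host": "web01", "datatime": "2022-12-09", "level": "info"}]
    mapping = parse_rename_clause("host as hosts, datatime as datatimes")
    print(rename(logs, mapping))
    print(logs)  # unchanged: the rename only applies to the returned copies
```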

♦ Parse command

The parse command extracts fields dynamically during a search, so that data in the system can be filtered by the newly extracted fields.

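Note: Fields newly extracted by the regular expression must not have the same names as existing fields.

Format: * | parse "regex"

To parse an existing field instead of the original log, put the field name before the expression, as in the @timestamp examples below.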

• Usage 1: Field extraction of original log

Example 1: Enter * | parse "(?<ip>\d+\.\d+\.\d+\.\d+)" to extract the IP address from the log data into a new field named ip, so that IP values are extracted uniformly.

• Usage 2: Field re-extraction of parsed fields

Example 2: Enter * | parse @timestamp "(?<date>\S+)\s(?<hour>\d{2}).*" to re-extract the @timestamp field into two new fields, "date" and "hour".

• Usage 3: Use in conjunction with the Rename command

Example 3: Enter * | parse @timestamp "(?<date>\S+)\s(?<hour>\d{2}).*" | rename source as sources to extract fields from @timestamp and rename "source" to "sources".
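The extraction step can be approximated with Python's re module. Python spells named groups (?P<name>...) rather than (?<name>...), so the example patterns are adapted accordingly. The helper `parse_field`, the assumption that the raw log line is stored under a hypothetical `message` key, and the clash check (which implements the note above) are illustrative only.

```python
import re
from typing import Optional


def parse_field(records: list[dict], pattern: str, source: Optional[str] = None) -> list[dict]:
    """Return copies of records with new fields taken from the pattern's named groups.

    source is the field to parse; None means the raw log line, which this
    sketch assumes is stored under the hypothetical key "message".
    """
    regex = re.compile(pattern)
    new_fields = set(regex.groupindex)  # names of the named groups
    out = []
    for rec in records:
        # Per the note above: extracted names must not repeat existing field names.
        clash = new_fields & set(rec)
        if clash:
            raise ValueError(f"extracted fields clash with existing fields: {sorted(clash)}")
        text = str(rec.get(source if source is not None else "message", ""))
        match = regex.search(text)
        extracted = {k: v for k, v in match.groupdict().items() if v is not None} if match else {}
        out.append({**rec, **extracted})
    return out


if __name__ == "__main__":
    logs = [{"message": "login from 10.0.0.12 ok", "@timestamp": "2022-12-09 03:49:50"}]
    # Example 1, adapted to Python syntax.
    print(parse_field(logs, r"(?P<ip>\d+\.\d+\.\d+\.\d+)")[0]["ip"])  # 10.0.0.12
    # Example 2, adapted: re-extract date and hour from @timestamp.
    parsed = parse_field(logs, r"(?P<date>\S+)\s(?P<hour>\d{2}).*", source="@timestamp")
    print(parsed[0]["date"], parsed[0]["hour"])  # 2022-12-09 03
```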

♦ Join command

The join command provides convenient data association for ad hoc analysis and data cleaning. In AnyRobot, it associates the result (field set) of the main search with the result (field set) of a sub-search through key fields.

Format: * | join type=left field-list [sub-search]

• type: the join type, either inner or left; optional, defaults to left. With an inner join, a record is returned only if the value of the join field exists in both the main search and the sub-search. With a left join, a record is returned as long as the value of the join field exists in the main search.

• field-list: the join field(s); mandatory.

• sub-search: mandatory; its results are joined to the results of the main search.

• Usage 1: Use with the rename command to rename the join field

Example 1: Enter type: "as access log" | join type=left error ID [type: "https alert forwarding"] | rename error ID as error IDs. This left-joins the data containing an "error ID" in "as access log" with the data containing an "error ID" in "https alert forwarding", and then renames "error ID" to "error IDs".

• Usage 2: Extract the field with the parse command, then use the join command to associate the field

Example 2: Enter type: "as access log" | parse user "(?<username>.*)" | join type=inner username [type: "as action log"] | rename user as username.
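The difference between the two join types can be sketched in Python over lists of dict records. This is an assumed model, not AnyRobot's implementation: `join` is a hypothetical helper, each matching pair produces one output row, records missing a key field never match, and main-search values win when both sides share a field name.

```python
from collections import defaultdict


def join(main: list[dict], sub: list[dict], fields: list[str], how: str = "left") -> list[dict]:
    """Join main-search records with sub-search records on the key fields."""
    if how not in ("left", "inner"):
        raise ValueError("how must be 'left' or 'inner'")

    def key(rec: dict) -> tuple:
        return tuple(rec.get(f) for f in fields)

    # Index sub-search records by key; records missing a key field are skipped.
    index: dict = defaultdict(list)
    for rec in sub:
        k = key(rec)
        if None not in k:
            index[k].append(rec)

    out = []
    for rec in main:
        matches = index.get(key(rec), [])
        if matches:
            # One row per matching pair; main-search values win on name clashes.
            out.extend({**m, **rec} for m in matches)
        elif how == "left":
            out.append(dict(rec))  # a left join keeps unmatched main records
    return out


if __name__ == "__main__":
    access = [{"error ID": 1, "path": "/a"}, {"error ID": 2, "path": "/b"}]
    alerts = [{"error ID": 1, "alert": "disk full"}]
    print(join(access, alerts, ["error ID"]))               # both access records kept
    print(join(access, alerts, ["error ID"], how="inner"))  # only error ID 1
```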

♦ Top command

The top command finds the most frequent values of a specified field. The result contains each value's count and its percentage of the total. Frequencies can also be computed per group after grouping by a field.
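A minimal Python sketch of the counting that top performs, built on `collections.Counter`. The output keys `count` and `percent`, the optional `by` grouping parameter, and the choice to compute percentages over the records that have the field are assumptions; the `count` key is chosen to match the stats sum(count) usage in the stats examples.

```python
from collections import Counter, defaultdict
from typing import Optional


def top(records: list[dict], field: str, limit: int = 10, by: Optional[str] = None) -> list[dict]:
    """Most frequent values of `field`, with count and percent, optionally per `by` group."""
    groups: dict = defaultdict(list)
    for rec in records:
        groups[rec.get(by) if by is not None else None].append(rec)

    rows = []
    for group_value, group in groups.items():
        values = [rec[field] for rec in group if field in rec]
        # most_common orders values with equal counts by first occurrence.
        for value, n in Counter(values).most_common(limit):
            row = {field: value, "count": n, "percent": round(100 * n / len(values), 2)}
            if by is not None:
                row[by] = group_value
            rows.append(row)
    return rows


if __name__ == "__main__":
    logs = [{"referer_domain": d} for d in ["a.com", "a.com", "b.com", "a.com", "c.com"]]
    rows = top(logs, "referer_domain", limit=2)
    print(rows[0])  # {'referer_domain': 'a.com', 'count': 3, 'percent': 60.0}
    # Roughly what "top limit=2 referer_domain | stats sum(count) AS total" computes:
    print(sum(row["count"] for row in rows))  # 4
```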

♦ Stats command

Stats is a basic command and the basis for searching and building visualizations. It computes aggregate statistics over search results, including count, max, min, avg, sum, percent distribution, and percentile rank.

You can also use a BY clause to group the results by the specified fields and compute the statistics within each group. The result contains one result set per group.

Format: * | stats stats-function(field1, field2...) AS field BY field-list

• stats-function: the statistical function to apply

• (field1, field2...): the fields to aggregate

• AS field: the field that stores the aggregated result

• BY field-list: optional; the fields by which results are grouped before the statistics are calculated
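The BY grouping can be modeled as "bucket the records by the BY field, then aggregate each bucket". The Python sketch below assumes list-of-dict records and supports only a handful of functions; the `stats` helper, its `as_name` parameter, and the default output name `func(field)` are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional

# A few aggregate functions, named after the stats functions described here.
AGGREGATES = {"count": len, "sum": sum, "min": min, "max": max, "avg": mean}


def stats(records: list[dict], func: str, field: str,
          by: Optional[str] = None, as_name: Optional[str] = None) -> list[dict]:
    """Aggregate `field` with `func`, once overall or once per value of `by`."""
    aggregate = AGGREGATES[func]
    out_name = as_name or f"{func}({field})"
    groups: dict = defaultdict(list)
    for rec in records:
        if field in rec:
            groups[rec.get(by) if by is not None else None].append(rec[field])
    rows = []
    for group_value, values in groups.items():
        row = {out_name: aggregate(values)}
        if by is not None:
            row[by] = group_value
        rows.append(row)
    return rows


if __name__ == "__main__":
    access = [
        {"host": "web01", "kbps": 10}, {"host": "web01", "kbps": 20},
        {"host": "web02", "kbps": 5},
    ]
    # Like: sourcetype=access* | stats avg(kbps) BY host
    print(stats(access, "avg", "kbps", by="host"))
    # Like: ... | stats sum(kbps) AS total
    print(stats(access, "sum", "kbps", as_name="total"))
```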

The stats functions are summarized as follows:

Note: The min, max, avg, extended_stats, sum, percentiles, and percentile_ranks functions accept only numeric fields.

• Usage 1: Returns the average transfer rate for each host

Example 1: sourcetype=access* | stats avg(kbps) BY host

• Usage 2: Search the access logs and calculate the total count of the top 100 values of the "referer_domain" field

Example 2: sourcetype=access_combined | top limit=100 referer_domain | stats sum(count) AS total

• Usage 3: For the field sales with the values 15, 15, 30, 40, and 50:

• Count: Returns the number of values in the target field (any field type). stats count(sales) = 5

• Max: Returns the maximum value of the target field (numeric field). stats max(sales) = 50

• Min: Returns the minimum value of the target field (numeric field). stats min(sales) = 15

• Avg: Returns the average value of the target field (numeric field). stats avg(sales) = 30

• Sum: Returns the sum of the target field (numeric field). stats sum(sales) = 150

• Cardinality: Returns the number of distinct values in the target field (any field type). stats cardinality(sales) = 4

• Extended_stats: Returns the count, maximum, minimum, average, sum, sum of squares, variance, and standard deviation of the target field (numeric field). stats extended_stats(sales) = 5 (count), 50 (maximum), 15 (minimum), 30 (average), 150 (sum), 5450 (sum of squares), 237.5 (variance), 15.41104 (standard deviation). The variance shown is the sample variance: the sum of squared deviations from the mean (950) divided by n - 1 = 4.

• Percentiles: Returns custom percentiles of the target field (numeric field). stats percentiles(sales, 70) = 38, that is, the 70th percentile of sales is 38. stats percentiles(sales, 20, 50, 90) = 15, 30, 46, that is, the 20th, 50th, and 90th percentiles of sales are 15, 30, and 46.

• Percentile_ranks: Returns the percentile rank of a given value of the target field (numeric field). stats percentile_ranks(sales, 50) = 100%, meaning that 100% of sales values are less than or equal to 50.
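The worked values above can be reproduced with Python's statistics module. The `percentile` helper uses linear interpolation between sorted values, an assumed method that happens to reproduce every percentile in the examples, and `percentile_rank` counts values less than or equal to the given value; both helper names are hypothetical.

```python
import math
import statistics

sales = [15, 15, 30, 40, 50]


def percentile(values: list[float], p: float) -> float:
    """p-th percentile by linear interpolation between sorted values (assumed method)."""
    xs = sorted(values)
    rank = (len(xs) - 1) * p / 100
    lower = math.floor(rank)
    upper = min(lower + 1, len(xs) - 1)
    return xs[lower] + (xs[upper] - xs[lower]) * (rank - lower)


def percentile_rank(values: list[float], v: float) -> float:
    """Percentage of values less than or equal to v."""
    return 100 * sum(1 for x in values if x <= v) / len(values)


extended_stats = {
    "count": len(sales),
    "max": max(sales),
    "min": min(sales),
    "avg": statistics.mean(sales),
    "sum": sum(sales),
    "sum_of_squares": sum(x * x for x in sales),
    "variance": statistics.variance(sales),    # sample variance: divides by n - 1
    "std_deviation": statistics.stdev(sales),  # square root of the sample variance
}
cardinality = len(set(sales))  # distinct values: 15, 30, 40, 50

if __name__ == "__main__":
    print(extended_stats)
    print(cardinality)                                             # 4
    print(round(percentile(sales, 70), 6))                         # 38.0
    print([round(percentile(sales, p), 6) for p in (20, 50, 90)])  # [15.0, 30.0, 46.0]
    print(percentile_rank(sales, 50))                              # 100.0
```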
