Updated at: 2022-12-09 03:49:50

A recovered data stream extracts archived cold data, re-parses it, and stores it in Elasticsearch, restoring the archived cold data to AnyRobot as hot data for search and analysis. The procedure is as follows:

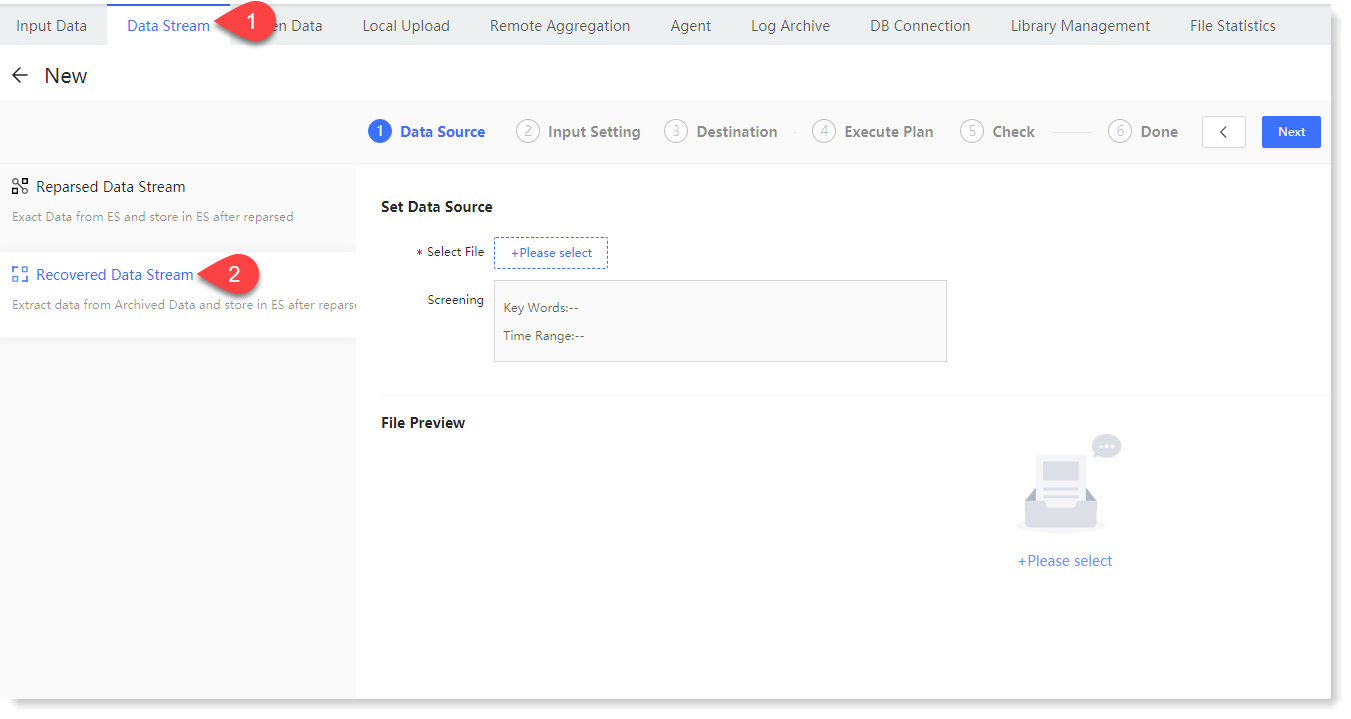

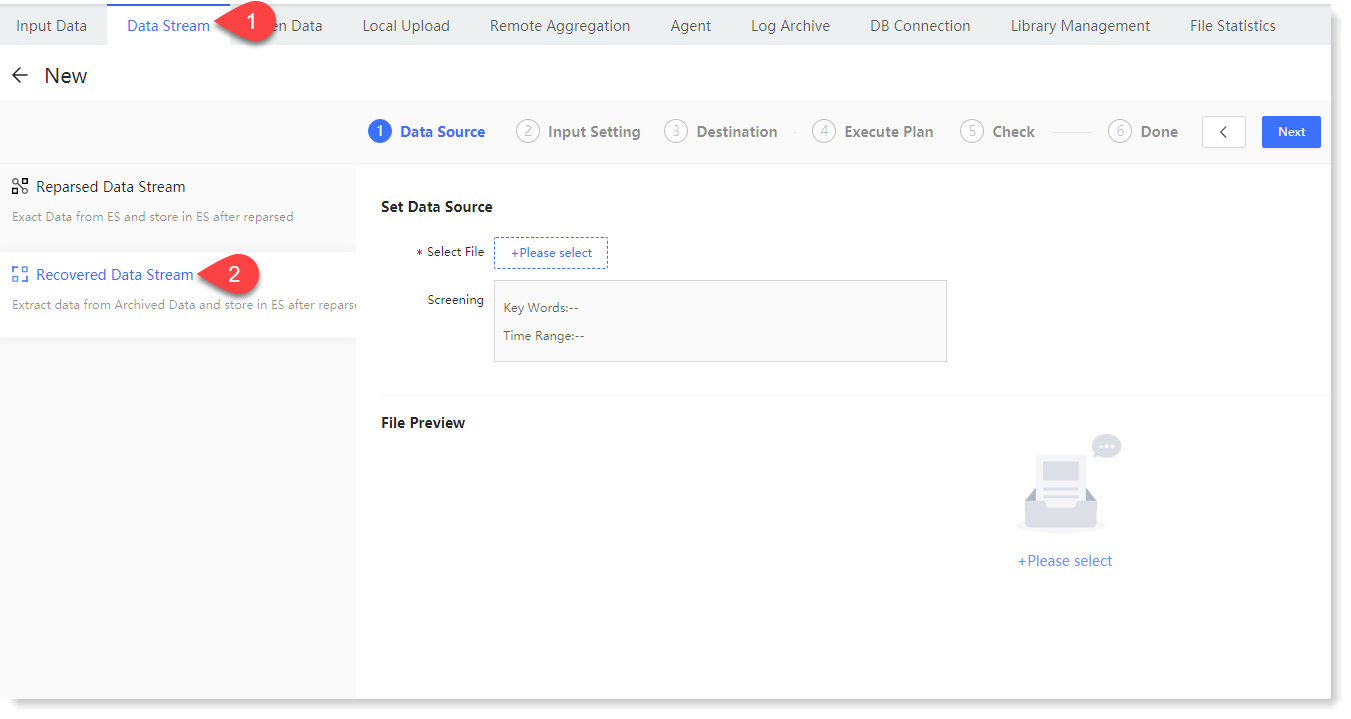

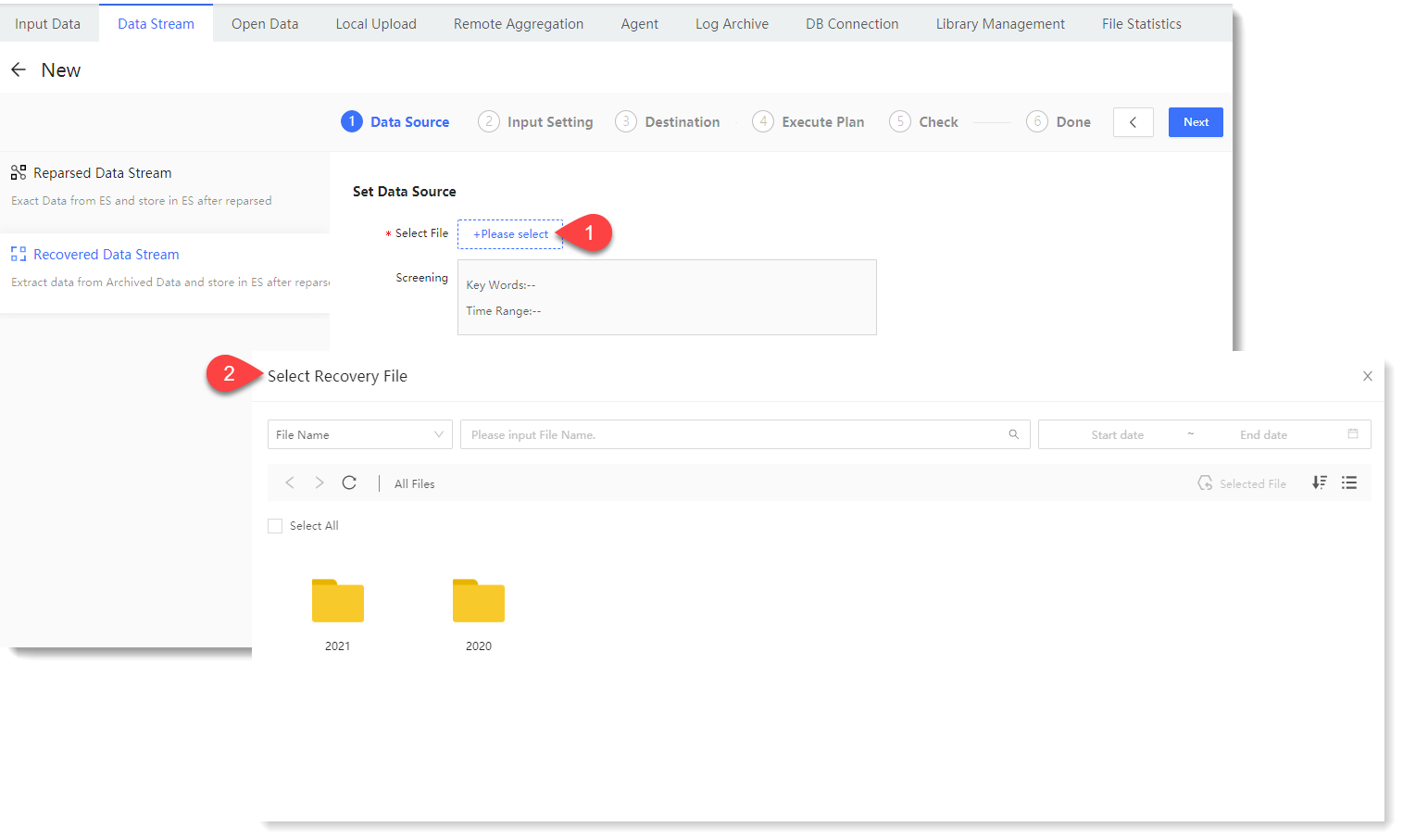

1. Click Data Source > Data Stream > + New to create a new data stream, then click Recovered Data Stream to display its configuration parameters, as follows:


Note: Archived data sources can also be selected through the log archive function. For details, refer to the Log File section.

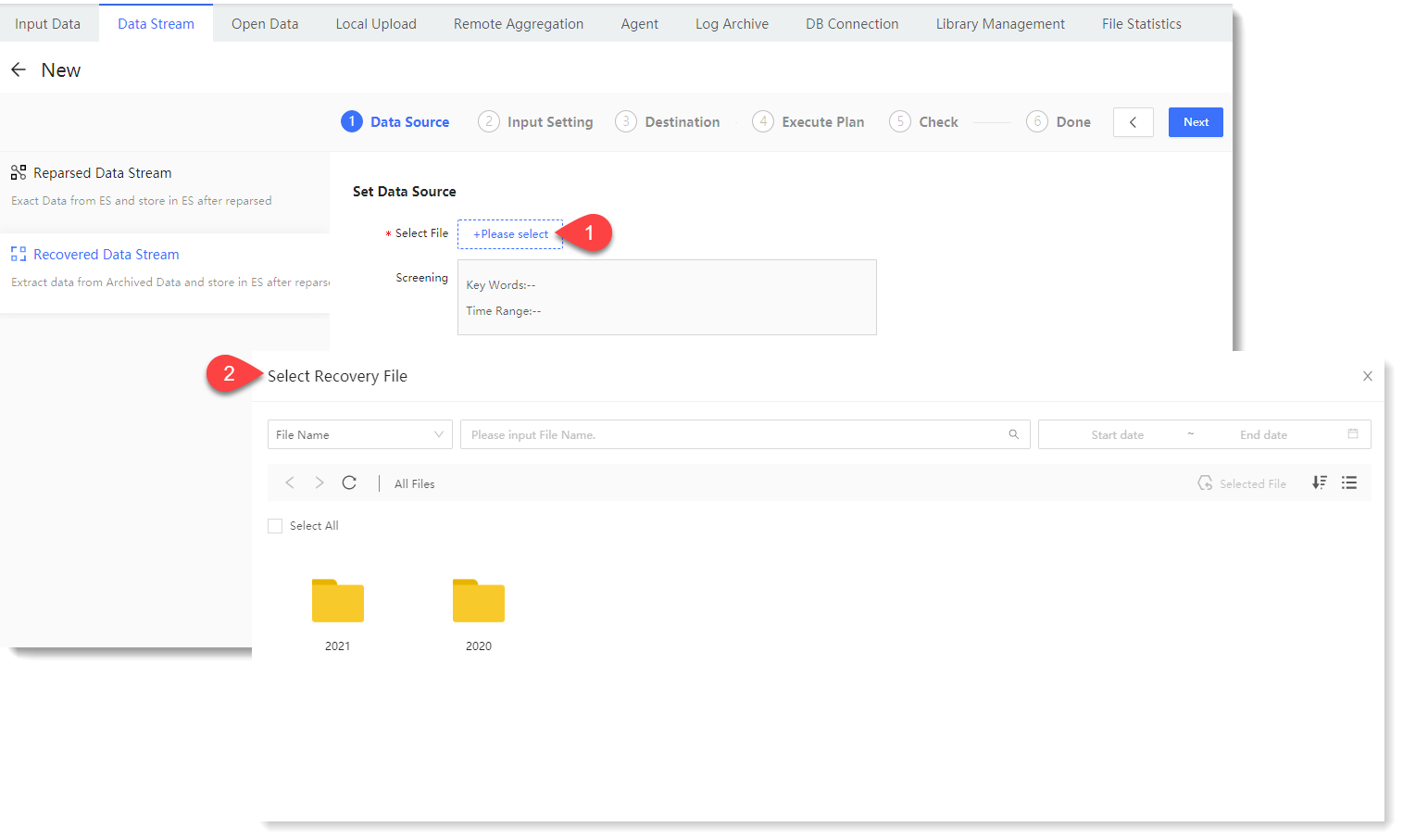

2. To configure the data source parameters, click Please select to open Select Recovery File, where you can select the log files to recover, as follows:

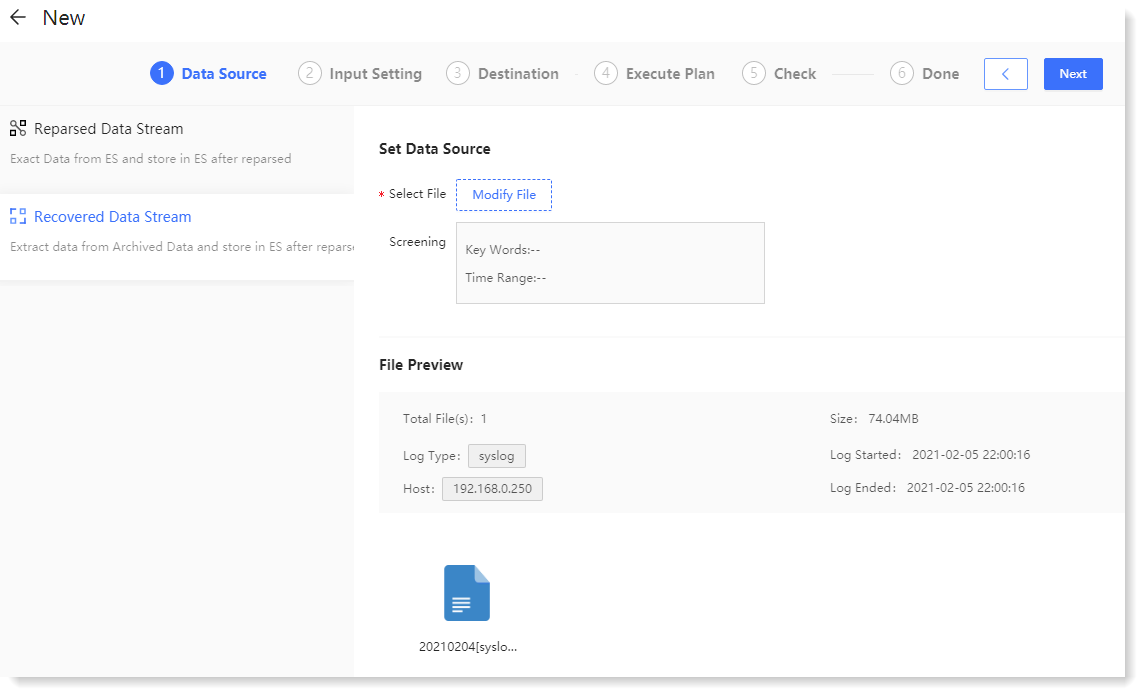

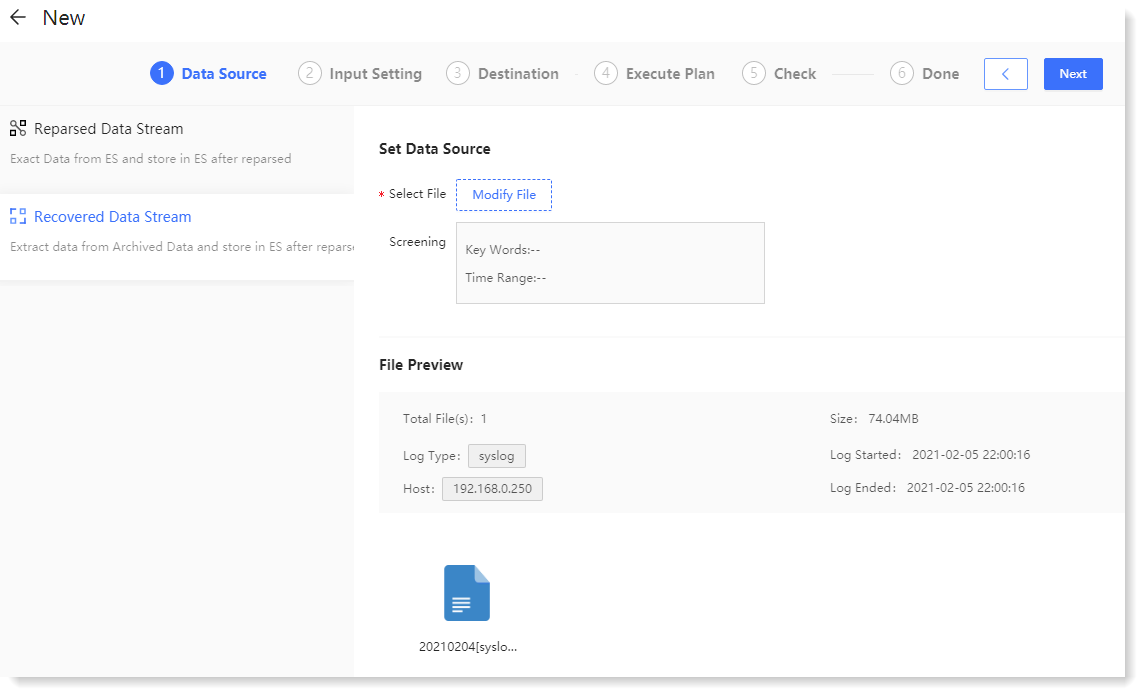

3. Click OK to view details of the selected log files in File Preview, including Total Files, the Log Type/Host of the logs, and the full list of files to recover, as follows:

4. Click Next to go to Input Setting, where you can customize the Log Type, Host, and Log Tag field values as needed, as follows:


Note: These three fields are optional and can be left unset.

5. Click Next to go to Destination and configure where the data from the data source is sent. You can either create a new Kafka topic or select an existing one:

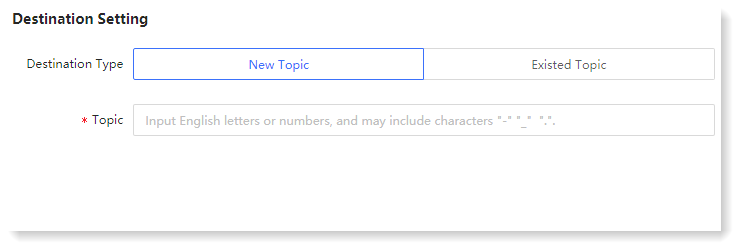

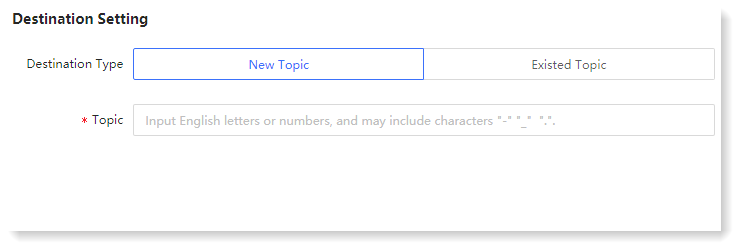

• New Topic: Enter the name of the Kafka topic to create, as follows:


A new topic name must meet the following requirements:

• It cannot be empty or duplicate the name of an existing topic;

• It may contain only English letters, digits, and the characters "-", "_", and ".";

• It must be 1 to 32 characters long.

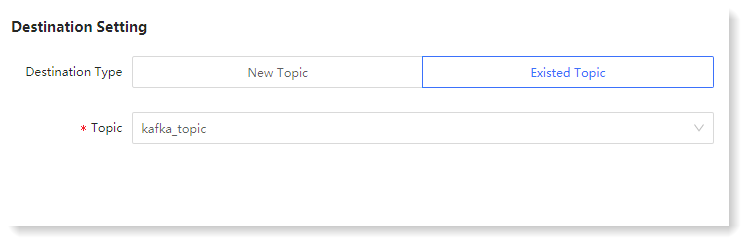

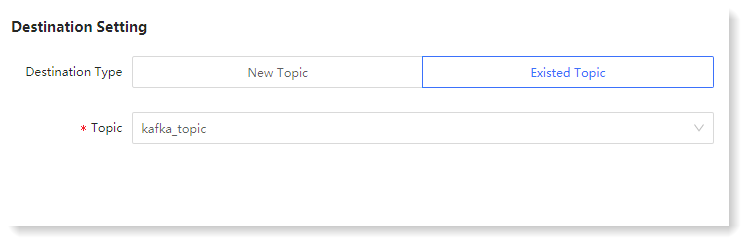

• Existed Topic: Select a topic that already exists in the system, as follows:

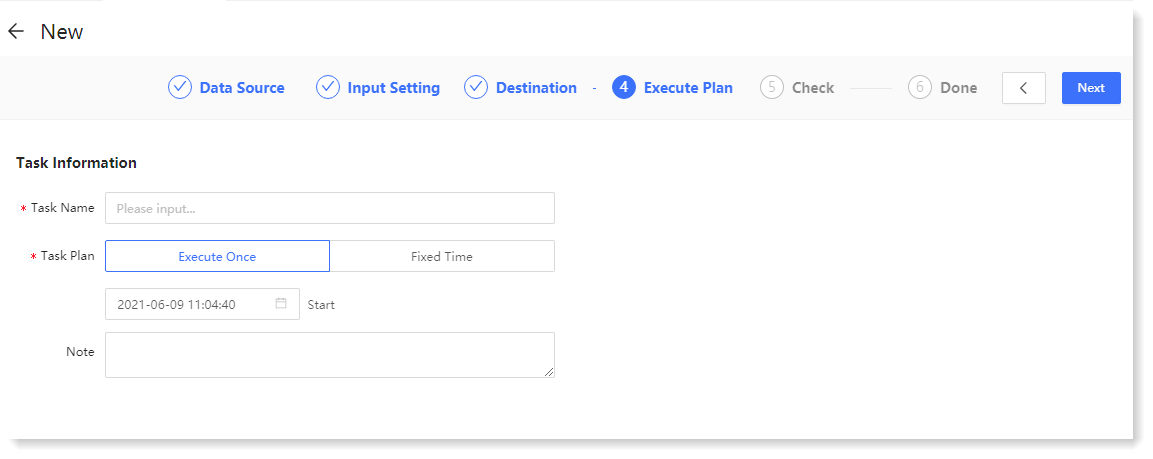

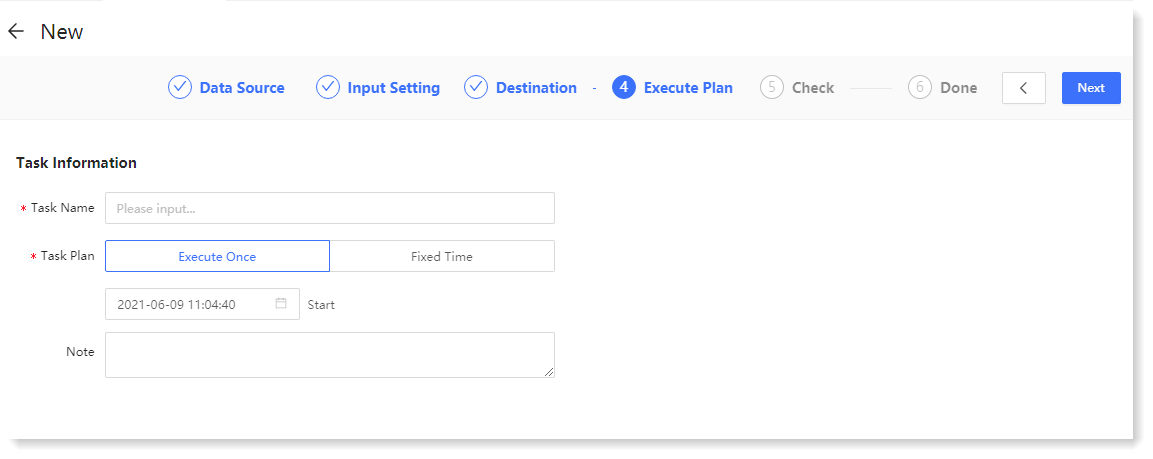

6. Click Next to set the Execute Plan parameters, as follows:

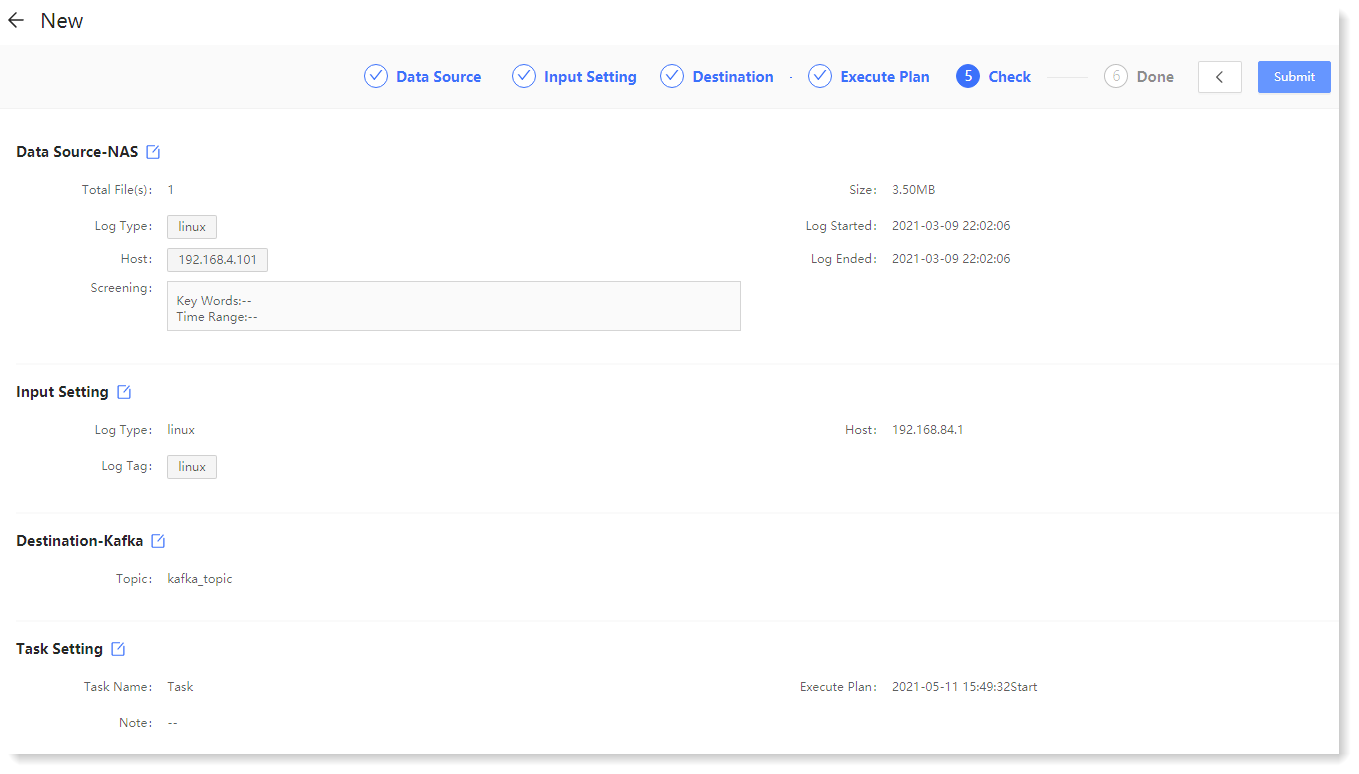

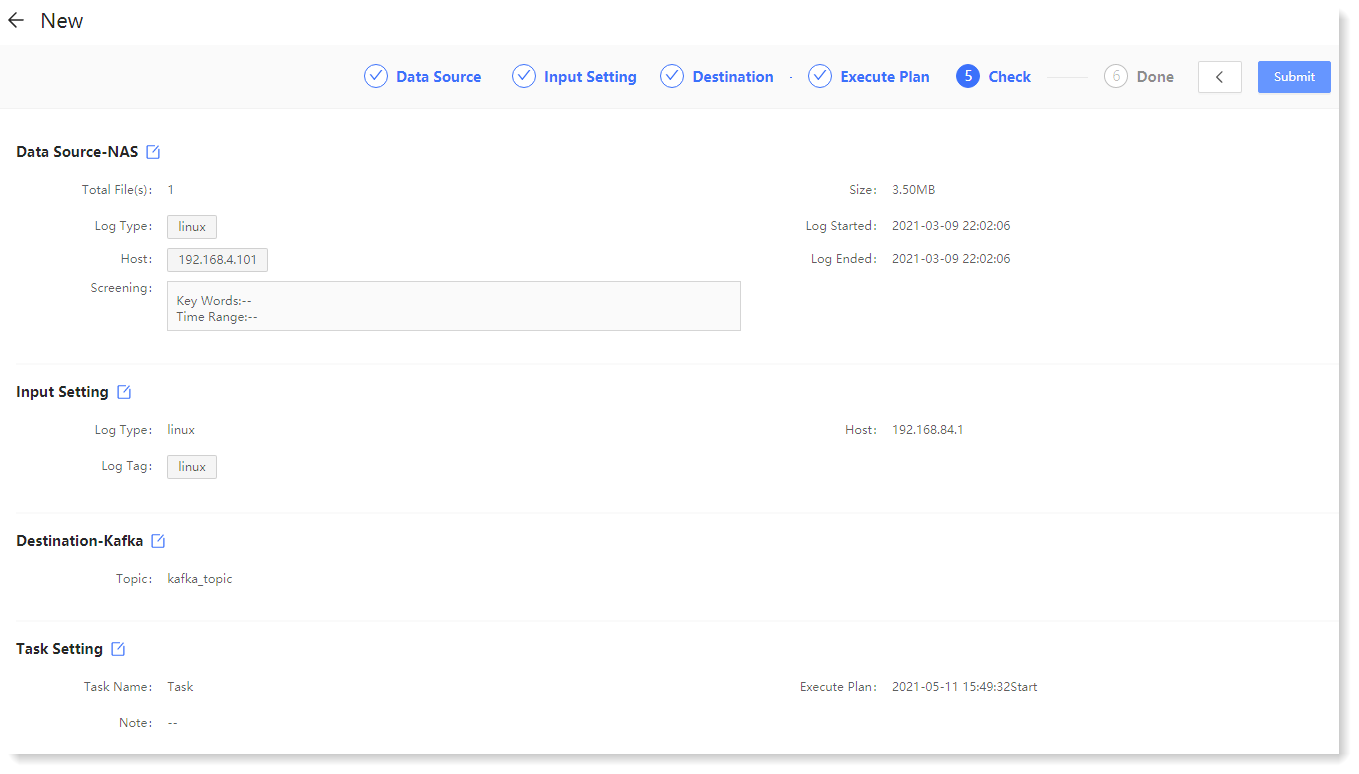

7. Click Next to go to Check, where you can review and confirm the Recovered Data Stream configuration parameters. Click the corresponding icon to modify the configuration parameters, as follows:

8. Click Submit to finish creating the data stream and go to Done, where you can choose Check Lists or Add More Tasks, as follows:

• Check Lists: Click to go to the data stream list page to view tasks;

• Add More Tasks: Click to create a new data stream task.
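As a rough illustration, the New Topic naming requirements in step 5 can be checked before submitting the form. The sketch below is a hypothetical Python helper, not part of AnyRobot or its API. It assumes that "English characters" means ASCII letters and interprets "cannot be renamed" as "cannot duplicate the name of an existing topic".

```python
from __future__ import annotations

import re

# Hypothetical helper (not an AnyRobot API): checks a proposed New Topic name
# against the naming requirements listed in step 5.
# Assumption: "English characters" means the ASCII letters A-Z and a-z.
_TOPIC_PATTERN = re.compile(r"[A-Za-z0-9._-]{1,32}")


def is_valid_topic_name(name: str, existing_topics: set[str]) -> bool:
    """Return True if `name` could be used as a New Topic name."""
    # The name must be 1 to 32 characters drawn only from letters, digits,
    # "-", "_" and "."; fullmatch rejects empty strings and any other character.
    if not _TOPIC_PATTERN.fullmatch(name):
        return False
    # Assumption: the name must not duplicate a topic already in the system.
    return name not in existing_topics


if __name__ == "__main__":
    existing = {"app-logs"}
    for candidate in ["nginx_access.v1", "", "app-logs", "bad name", "x" * 33]:
        print(f"{candidate!r}: {is_valid_topic_name(candidate, existing)}")
```

In this example only `nginx_access.v1` passes: the others are empty, duplicate an existing topic, contain a space, or exceed 32 characters. A single regular expression keeps the character and length rules in one place, while the duplicate check needs the list of topics already in the system, which this sketch simply takes as a set.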
